But grasping the actual concept behind the technology, beneath the jargon of varying refresh rates, frame caps and drops, input latency, stuttering, screen tearing, and so on, is not so easy. So whether it should be enabled or disabled remains perplexing for many gamers. This article explains what exactly adaptive sync is, how it helps your gameplay, and whether it should be turned on or off.

What is Adaptive Sync?

Adaptive sync is a standard protocol that lets a display synchronize its refresh rate with the frame rate of the incoming content. It provides a royalty-free framework for displays to move across a range of refresh rates according to the content displayed. The technology aims to overcome visual artifacts and helps to standardize, and so commercialize, Variable Refresh Rate (VRR) in monitors.

Adaptive sync was developed and is maintained by VESA (Video Electronics Standards Association) and is provided for free to its member companies. Companies like AMD and NVIDIA have built their own adaptive sync implementations, tuned to work more optimally with their GPUs.

How Does Adaptive Sync Help Smoothen Visuals?

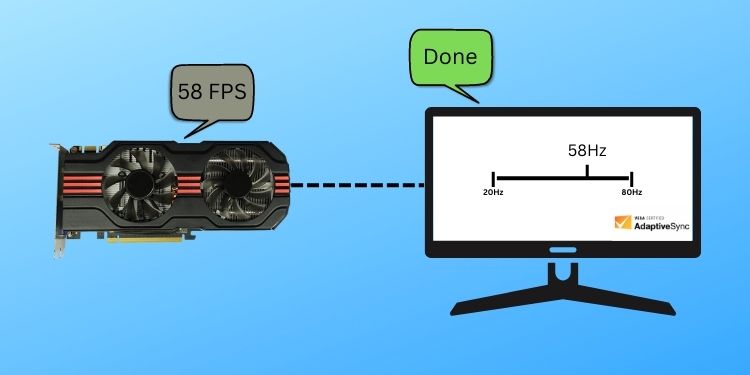

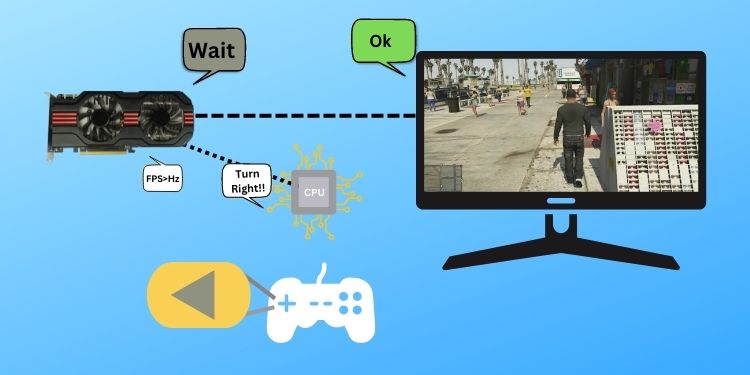

Before you decide whether to use adaptive sync, it helps to understand how it synchronizes with the display. So, let us begin with how a display works.

Whatever is shown on your screen is a sequence of frames (pictures) that is refreshed continuously. The rate at which a monitor can refresh frames is known as its refresh rate. In other words, a 30 Hz refresh rate means the monitor can change the picture on its display 30 times per second.

But the content that a monitor displays has its own frame rate. A video might have a playback speed of 60 fps, which means 60 frames are to be shown per second. For games, the GPU renders frames in real time, and the rate can vary widely depending on the game, the scene within it, and the capacity of the GPU itself.

The mismatch between the monitor’s refresh rate and the content’s fps creates visual artifacts such as screen tearing and stuttering. If the fps is higher than the refresh rate, the monitor displays parts of two frames at once, separated by a hard horizontal line. This is called screen tearing.

To cope with it, V-Sync (Vertical Sync) limits the GPU so that its render speed matches the refresh rate. But it costs gamers increased input latency, because the GPU won’t render frames beyond the refresh rate, regardless of user input. Nvidia later introduced Fast Sync as an alternative to V-Sync with lower input latency.

Sync technologies like V-Sync and Fast Sync work well for videos and constant frame rates. But as mentioned earlier, frame rates aren’t consistent while gaming. Simple or partially pre-rendered scenes render swiftly, but a 3D scene with a higher number of polygons takes the GPU longer to render. As the fps drops, the monitor holds on some frames, or displays a single frame multiple times, to fill its refresh cycles, resulting in stuttering.

Adaptive sync is the technology created to overcome these problems. With it, companies are able to make displays that change their refresh rate. A capable monitor can now follow the rendering speed of the GPU and shift its refresh rate as that speed varies. This helps avoid stuttering at lower fps and saves power by dropping to a minimal refresh rate when the visuals are stationary.
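
To make the difference concrete, here is a minimal Python sketch of the stuttering described above. It is a simplified model, not how any real driver or monitor is implemented: it assumes the GPU renders frames back to back, that a fixed-rate display shows the newest finished frame at every refresh tick, and that an adaptive display refreshes whenever a frame is ready as long as the frame time fits within its VRR range. The function names and the sample numbers are hypothetical.

```python
def completion_times(frame_times_ms):
    """Cumulative finish time of each frame, assuming back-to-back rendering."""
    done, t = [], 0.0
    for ft in frame_times_ms:
        t += ft
        done.append(t)
    return done


def fixed_refresh_repeats(frame_times_ms, refresh_hz):
    """Count refresh ticks on a fixed-rate display that re-show an old frame."""
    done = completion_times(frame_times_ms)
    if not done:
        return 0
    interval = 1000.0 / refresh_hz  # time between refreshes, in ms
    repeats = 0
    last_shown = -1  # index of the frame shown at the previous tick
    completed = 0    # number of frames finished so far
    k = 1
    while k * interval <= done[-1] + 1e-9:
        tick = k * interval
        # Advance past every frame that has finished by this tick.
        while completed < len(done) and done[completed] <= tick + 1e-9:
            completed += 1
        latest = completed - 1
        if latest >= 0 and latest == last_shown:
            repeats += 1  # nothing new was ready: the old frame is shown again
        last_shown = latest
        k += 1
    return repeats


def adaptive_repeats(frame_times_ms, vrr_min_hz):
    """Count frames too slow for the VRR range, which the display must repeat."""
    longest_interval = 1000.0 / vrr_min_hz
    return sum(1 for ft in frame_times_ms if ft > longest_interval)


# Frame times alternate between 20 ms and 30 ms (illustrative values).
frames = [20, 30, 20, 30]
print(fixed_refresh_repeats(frames, 50))  # 1 repeated frame on a fixed 50 Hz display
print(adaptive_repeats(frames, 30))       # 0 repeats if the VRR range reaches down to 30 Hz
```

In this toy model the fixed 50 Hz display has to show one frame twice because the 30 ms frame misses a 20 ms refresh tick, while the adaptive display simply waits for the frame and refreshes when it arrives.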

Should You Turn Adaptive Sync On or Off?

Adaptive sync adds far less input latency than traditional sync technologies, but it doesn’t eliminate it completely. That means the latency can sometimes be noticeable in competitive games, where even half a millisecond counts. So, you can turn the feature on for video playback and for high-end games with visually intense gameplay. It should also work well with optical flow accelerators and AI-based vertical blank space fillers.

If the fps constantly exceeds the monitor’s maximum refresh rate (possible with powerful GPUs), adaptive sync is of no use. For such cases, many games provide a setting to cap the maximum number of frames they render. Capping the fps somewhere near the refresh rate helps avoid the excess-fps problem. With the cap in place, turning on adaptive sync then helps align the refresh rate with the fps during fps drops.
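
The capping advice above can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, the 48 to 144 Hz VRR range is an example rather than a figure from any particular monitor, and the margin of 3 fps below the maximum refresh rate is an assumed value standing in for "somewhere near the refresh rate".

```python
def suggested_fps_cap(max_refresh_hz, margin_fps=3):
    """Return an fps cap slightly below the monitor's maximum refresh rate.

    margin_fps is an assumed, illustrative safety margin.
    """
    return max(1, max_refresh_hz - margin_fps)


def adaptive_sync_state(fps, vrr_min_hz, vrr_max_hz):
    """Say where the current fps sits relative to the monitor's VRR range."""
    if fps > vrr_max_hz:
        return "above range"  # adaptive sync cannot follow; cap the fps
    if fps < vrr_min_hz:
        return "below range"  # too slow for the display to follow directly
    return "in range"         # refresh rate can track the fps


cap = suggested_fps_cap(144)
print(cap)                                 # 141
print(adaptive_sync_state(200, 48, 144))   # above range
print(adaptive_sync_state(cap, 48, 144))   # in range
```

An uncapped 200 fps sits above the example range, so adaptive sync has nothing to do, while the capped value falls inside it.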

FreeSync and G-Sync

FreeSync is an adaptive sync technology developed to make use of the refresh rate control that displays provide for power-saving modes. Initially based on DisplayPort 1.2a, it was later extended to other ports such as HDMI. The technology is developed and maintained by AMD and is free to use.

AMD’s Enhanced Sync, if enabled, turns off V-Sync when the fps drops below the refresh rate. FreeSync then comes into play to handle the gap between the fps and the refresh rate.

Nvidia G-Sync is a hardware module embedded in the monitor, combined with a software solution, that aligns the refresh rate with the frame rate. In this module-based form, the technology is only supported by monitors that have Nvidia’s proprietary hardware built into the display. But even with a G-Sync module, when the GPU’s fps exceeds the refresh rate, using Fast Sync helps to synchronize the two.